Injecting Python Dependencies Using Lambda Layer and CodePipeline


Benefits and Limitations of Lambda Layers

Managing dependencies can be difficult regardless of the type of application you are developing: desktop, mobile or cloud. AWS offers three ways to add dependencies to Lambda functions:

- build a container image with all the dependencies installed

- pack them into the zip file together with the function's handler code

- move them into one or more lambda layers (zip file archives) and share them between multiple lambda functions.

The first two methods have one thing in common: they pull all dependencies every time the function's handler code is built. This is especially tedious when multiple lambda functions share the same dependencies.

Luckily, deployment time can be reduced significantly by moving the dependencies into a separate lambda layer that is deployed only when the list of dependencies changes. Additional benefits of this approach are lower artifact storage costs and faster function invocation. However, even good things have limitations, and lambda layers are no exception. The limits are:

- a maximum of 5 layers per lambda function

- a maximum size of 250 MB for the unzipped deployment package, including all layers

- manual update of the layer version: when a new version of a lambda layer is published, the configuration of every related lambda function must be updated manually, because a function references a specific layer version ARN.
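The two size-related limits can be checked locally before anything is deployed. The following is a minimal Python sketch, assuming the function package and the layers are available as local zip files and that 250 MB means 250 * 1024 * 1024 bytes; `check_limits` and `unzipped_size` are hypothetical helpers, not part of any AWS tool.

```python
import zipfile

# Limits from the list above. 250 MB is assumed to mean 250 * 1024 * 1024 bytes.
MAX_LAYERS = 5
MAX_UNZIPPED_BYTES = 250 * 1024 * 1024


def unzipped_size(zip_path: str) -> int:
    """Sum the uncompressed sizes of all entries in a zip archive."""
    with zipfile.ZipFile(zip_path) as archive:
        return sum(info.file_size for info in archive.infolist())


def check_limits(function_zip: str, layer_zips: list[str]) -> list[str]:
    """Return human-readable limit violations; an empty list means both limits hold."""
    problems = []
    if len(layer_zips) > MAX_LAYERS:
        problems.append(f"{len(layer_zips)} layers exceed the limit of {MAX_LAYERS}")
    # The 250 MB limit applies to the function package and all layers together.
    total = unzipped_size(function_zip) + sum(unzipped_size(z) for z in layer_zips)
    if total > MAX_UNZIPPED_BYTES:
        problems.append(f"unzipped size of {total} bytes exceeds {MAX_UNZIPPED_BYTES} bytes")
    return problems
```

For example, `check_limits("function.zip", ["python.zip"])` returns an empty list when both limits are satisfied.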

The Path Forward

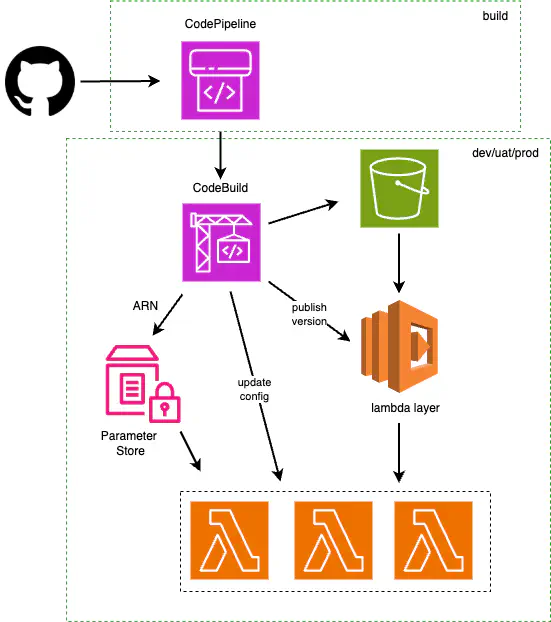

In this blog post, I am going to describe how to automate publishing lambda layer versions using CodePipeline and CloudFormation. One of our projects required several Python lambda functions sharing the same set of map processing packages. The size of the dependencies was within the lambda layer limits. However, our developers were in discovery mode and the list of required packages could change quite frequently, so we decided to automate lambda layer versions. At that time, we already had a large infrastructure built with CloudFormation and CodePipeline, which determined our choice of tools.

Several manual steps required automation:

- creating a zip file with the dependencies listed in requirements.txt

- uploading the zip file to an S3 bucket

- publishing a new version of the lambda layer

- updating the layer version ARN stored in Parameter Store

- updating the configuration of the related lambda functions.

All these operations were performed by a CodeBuild project. Usually, we have several deployment environments, including build, dev, UAT and production. CodeBuild projects, CodePipeline pipelines, their service IAM roles and artifact buckets are deployed in the build environment, and the CodeBuild projects run there as well. However, in this particular case, the CodeBuild project for lambda layer version automation was deployed in each environment (except build) to simplify interaction with the resources in those environments. The CodeBuild project was run by a pipeline that was triggered only by changes to the requirements.txt file.

With pipeline type V2 (PipelineType: V2), a CodePipeline trigger can be filtered by file path, in our case requirements.txt:

Triggers:
  - GitConfiguration:
      SourceActionName: Source
      Push:
        - Branches:
            Includes:
              - !Ref SourceBranch
          FilePaths:
            Includes:
              - requirements.txt
    ProviderType: CodeStarSourceConnection
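To illustrate what the file path filter does, here is a rough Python approximation of the push trigger above. It is a sketch under stated assumptions: it uses `fnmatch` glob matching, which is close to, but not guaranteed to be identical with, the way CodePipeline evaluates branch and file path patterns, and `push_triggers_pipeline` is a hypothetical helper.

```python
from fnmatch import fnmatchcase

# Hypothetical mirror of the FilePaths filter in the trigger above.
FILE_PATH_INCLUDES = ["requirements.txt"]


def push_triggers_pipeline(branch: str, source_branch: str, changed_files: list[str]) -> bool:
    """Approximate the push filter: the branch must match and at least one
    changed file (path relative to the repository root) must match an include."""
    if not fnmatchcase(branch, source_branch):
        return False
    return any(
        fnmatchcase(path, pattern)
        for path in changed_files
        for pattern in FILE_PATH_INCLUDES
    )
```

A push that only touches handler code leaves the pipeline idle, while any push that changes requirements.txt on the source branch starts it.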

We have around 30 lambda functions in this project and chose to keep each function in its own YAML template. Parameter Store was used to pass the ARN of the current layer version into the function templates through a dynamic reference:

Layers:
  - !Sub '{{resolve:ssm:/${ParameterName}}}'

This parameter keeps the configurations in sync. We changed function configurations both through their CloudFormation templates and through the CodeBuild project, so we needed a single source of truth for which layer version the current configuration should use.
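Because the parameter is the single source of truth, it is straightforward to check whether any function has drifted from it. A minimal sketch, assuming boto3-style clients are passed in (for example `boto3.client("ssm")` and `boto3.client("lambda")`); `find_drifted_functions` is a hypothetical helper, while `get_parameter` and `get_function_configuration` are existing boto3 client methods.

```python
def find_drifted_functions(ssm, lambda_client, parameter_name, function_names):
    """Return the functions whose configured layers do not include the
    layer version ARN currently stored in Parameter Store."""
    expected_arn = ssm.get_parameter(Name=parameter_name)["Parameter"]["Value"]
    drifted = []
    for name in function_names:
        config = lambda_client.get_function_configuration(FunctionName=name)
        # "Layers" is absent from the response when a function has no layers.
        layer_arns = [layer["Arn"] for layer in config.get("Layers", [])]
        if expected_arn not in layer_arns:
            drifted.append(name)
    return drifted
```

An empty result means every listed function already uses the layer version recorded in the parameter.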

CodeBuild Permissions

The service role of the CodeBuild project requires the following permissions:

- S3 permissions for multipart uploads to the bucket storing the zip file:

    - Effect: Allow
      Action:
        - s3:PutObject
        - s3:AbortMultipartUpload
      Resource: !Sub arn:aws:s3:::${BucketName}/*
    - Effect: Allow
      Action:
        - s3:GetBucketLocation
        - s3:ListBucketMultipartUploads
      Resource: !Sub arn:aws:s3:::${BucketName}

- Permissions to publish and read lambda layer versions:

    - Effect: Allow
      Action: lambda:PublishLayerVersion
      Resource: !Sub arn:aws:lambda:${AWS::Region}:${AWS::AccountId}:layer:${LayerName}
    - Effect: Allow
      Action: lambda:GetLayerVersion
      Resource: !Sub arn:aws:lambda:${AWS::Region}:${AWS::AccountId}:layer:${LayerName}:*

- Permission to update the configuration of the related lambda functions:

    - Effect: Allow
      Action: lambda:UpdateFunctionConfiguration
      Resource:
        - !Sub arn:aws:lambda:${AWS::Region}:${AWS::AccountId}:function:${FunctionName_1}
        - !Sub arn:aws:lambda:${AWS::Region}:${AWS::AccountId}:function:${FunctionName_2}

- Permission to update the Parameter Store parameter that stores the layer version ARN:

    - Effect: Allow
      Action: ssm:PutParameter
      Resource: !Sub arn:aws:ssm:${AWS::Region}:${AWS::AccountId}:parameter/${ParameterName}

- Permissions for the KMS key used to encrypt the bucket:

    - Effect: Allow
      Action:
        - kms:Decrypt
        - kms:GenerateDataKey*
      Resource: !Ref S3EncryptionKey
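With around 30 functions, listing every function ARN by hand is error-prone. The sketch below assembles the same statements as a Python dict that could be rendered with `json.dumps`; the function name and its arguments are hypothetical, `parameter` is assumed to be given without a leading slash (as in the `parameter/${ParameterName}` resource above), and `kms_key_arn` is assumed to be a full key ARN.

```python
def layer_automation_policy(region, account_id, bucket, layer, parameter, functions, kms_key_arn):
    """Build the CodeBuild service role policy described above as a dict."""
    lambda_arn = f"arn:aws:lambda:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow",
             "Action": ["s3:PutObject", "s3:AbortMultipartUpload"],
             "Resource": f"arn:aws:s3:::{bucket}/*"},
            {"Effect": "Allow",
             "Action": ["s3:GetBucketLocation", "s3:ListBucketMultipartUploads"],
             "Resource": f"arn:aws:s3:::{bucket}"},
            {"Effect": "Allow", "Action": "lambda:PublishLayerVersion",
             "Resource": f"{lambda_arn}:layer:{layer}"},
            {"Effect": "Allow", "Action": "lambda:GetLayerVersion",
             "Resource": f"{lambda_arn}:layer:{layer}:*"},
            # One ARN per function, generated instead of written by hand.
            {"Effect": "Allow", "Action": "lambda:UpdateFunctionConfiguration",
             "Resource": [f"{lambda_arn}:function:{name}" for name in functions]},
            {"Effect": "Allow", "Action": "ssm:PutParameter",
             "Resource": f"arn:aws:ssm:{region}:{account_id}:parameter/{parameter}"},
            {"Effect": "Allow",
             "Action": ["kms:Decrypt", "kms:GenerateDataKey*"],
             "Resource": kms_key_arn},
        ],
    }
```

Adding a function then means appending one name to the list rather than editing the policy.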

Python Version and CodeBuild Image

Python packages are installed into a virtual environment, so it is important to know which Python versions are available in a given CodeBuild image. We use the aws/codebuild/standard:7.0 image. According to the AWS documentation, this image should support Python 3.9 through 3.13. In practice, at the time of writing, the image came with Python 3.10 built in, and venv had to be installed for that specific version.

Our install phase included the following commands:

- apt update && apt upgrade -y && apt install -y python3.10-dev python3.10-venv jq libpq-dev

- mkdir -p lambda-layer/python/lib/python3.10/site-packages

- python3.10 -m venv venv3_10

- . venv3_10/bin/activate && pip install -r requirements.txt -t lambda-layer/python/lib/python3.10/site-packages

Make sure you use the correct path for the content of your lambda layer (python/lib/python3.10/site-packages in our case); the path depends on the runtime. When a new version of the lambda layer is published, its compatible runtimes must match the Python version used to install the packages.
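The layer path and the interpreter version both have to agree with the runtime passed to publish-layer-version. A small Python sketch of both checks, assuming runtime identifiers of the form `python3.x`; the helper names are hypothetical. Lambda also accepts a plain `python/` directory for Python layers, but the versioned path is what the commands above use.

```python
import sys


def layer_site_packages(runtime: str) -> str:
    """Map a runtime identifier such as 'python3.10' to the versioned
    directory inside the layer zip."""
    if not runtime.startswith("python3."):
        raise ValueError(f"not a Python 3 runtime: {runtime}")
    return f"python/lib/{runtime}/site-packages"


def interpreter_matches(runtime: str, version_info=sys.version_info) -> bool:
    """True if the interpreter running pip matches the target runtime."""
    return runtime == f"python{version_info.major}.{version_info.minor}"
```

Running `interpreter_matches("python3.10")` inside the activated venv before `pip install` would catch a mismatch between the image's Python and the runtime the layer is published for.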

Updates and Configurations

A new lambda layer version takes its content from the S3 bucket, so the bucket must exist before the first CodeBuild run. There are two options here. You can create one bucket in the build environment and store the zip files for all environments there; in this case, the bucket policy must allow cross-account access. Alternatively, each environment can have its own bucket for lambda dependencies.
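For the shared-bucket option, a bucket policy along the following lines could grant the environment accounts access. This is a minimal sketch assuming each environment's CodeBuild role needs to upload and read `python.zip`; the helper name and account IDs are hypothetical. Granting access to an account root only delegates it, so the roles in those accounts still need their own IAM permissions, and a bucket encrypted with a customer managed KMS key would also need a matching cross-account grant in the key policy.

```python
def cross_account_bucket_policy(bucket: str, account_ids: list[str]) -> dict:
    """Allow principals in the listed accounts to upload and read layer archives."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowLayerArchiveAccess",
                "Effect": "Allow",
                # Account roots delegate access to IAM principals in those accounts.
                "Principal": {"AWS": [f"arn:aws:iam::{acct}:root" for acct in account_ids]},
                "Action": ["s3:PutObject", "s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
```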

To make sure the upload of the dependencies archive finishes before a new lambda layer version is published, we placed the S3 upload in the pre_build phase of the buildspec:

- cd "$CODEBUILD_SRC_DIR/lambda-layer"

- zip -r python.zip *

- aws s3 cp python.zip s3://$BUCKET_NAME/python.zip
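The same archive can be produced from Python, which may be handy for checking the layout locally. A sketch assuming the `lambda-layer/` directory layout created during the install phase; `zip_layer` is a hypothetical helper. It stores paths relative to the layer root, so every entry starts with `python/`, the directory Lambda expects after extracting the layer under `/opt`.

```python
import os
import zipfile


def zip_layer(layer_root: str, zip_path: str) -> list[str]:
    """Zip every file under layer_root with paths relative to it, like
    `cd lambda-layer && zip -r python.zip *`, and return the entry names."""
    zip_abs = os.path.abspath(zip_path)
    names = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for dirpath, dirnames, filenames in os.walk(layer_root):
            dirnames.sort()  # deterministic archive order
            for filename in sorted(filenames):
                full_path = os.path.join(dirpath, filename)
                if os.path.abspath(full_path) == zip_abs:
                    continue  # never add the archive to itself
                # Zip entries use forward slashes, e.g. python/lib/...
                arcname = os.path.relpath(full_path, layer_root).replace(os.sep, "/")
                archive.write(full_path, arcname)
                names.append(arcname)
    return names
```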

All other steps were performed in the build phase.

The following command publishes the new lambda layer version, extracts LayerVersionArn from the JSON output with jq and assigns it to an environment variable:

- export LAYER_VERSION_ARN=$(aws lambda publish-layer-version --layer-name $LAYER_NAME --content S3Bucket=$BUCKET_NAME,S3Key=python.zip --compatible-runtimes python3.10 | jq -r '.LayerVersionArn')
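The equivalent call through boto3, without jq, might look like this. A sketch assuming a boto3 Lambda client is passed in; `publish_layer_version` and its `LayerName`, `Content` and `CompatibleRuntimes` parameters are part of boto3, while the `publish_layer` wrapper and its defaults are hypothetical.

```python
def publish_layer(lambda_client, layer_name, bucket, key="python.zip", runtime="python3.10"):
    """Publish a new layer version from an S3 object and return its ARN."""
    response = lambda_client.publish_layer_version(
        LayerName=layer_name,
        Content={"S3Bucket": bucket, "S3Key": key},
        # Must match the Python version used to install the packages.
        CompatibleRuntimes=[runtime],
    )
    return response["LayerVersionArn"]
```

With real credentials, this would be called as `publish_layer(boto3.client("lambda"), layer_name, bucket_name)`.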

The lambda layer version ARN is then used to update the SSM parameter:

- aws ssm put-parameter --name $SSM_PARAMETER_NAME --value $LAYER_VERSION_ARN --overwrite

and the configuration of every lambda function named in the LambdaNamesList parameter (the loop splits this value on whitespace, so the names must be separated by spaces):

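- for lambda_name in ${LambdaNamesList}; do aws lambda update-function-configuration --function-name "$lambda_name" --layers "$LAYER_VERSION_ARN"; done

Note that --layers replaces the function's entire list of layers, so any other layers a function uses must be included in the same call.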
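The last two steps translate to boto3 as follows. A sketch assuming boto3 `ssm` and `lambda` clients are passed in; `roll_out_layer` is a hypothetical wrapper around the real `put_parameter` and `update_function_configuration` calls. Like the CLI loop, it replaces each function's layer list with the single new ARN.

```python
def roll_out_layer(ssm, lambda_client, parameter_name, layer_version_arn, function_names):
    """Store the new layer version ARN, then attach it to every listed function."""
    # Same as `aws ssm put-parameter --overwrite`: the parameter already exists.
    ssm.put_parameter(Name=parameter_name, Value=layer_version_arn, Overwrite=True)
    for name in function_names:
        # Like --layers, Layers replaces the function's whole layer list.
        lambda_client.update_function_configuration(FunctionName=name, Layers=[layer_version_arn])
```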

Afterword

Although automating lambda layer deployment took additional effort, we found it worthwhile: it freed our developers and DevOps team from manually updating dependencies, publishing new layer versions and updating lambda configurations. As a bonus, build times dropped significantly, further improving the development workflow.