Security is not your problem


The sound and the fury of information security

The subject of information security is vast and can quickly become confusing. A lot has been written on the matter, and the mountain of publications keeps growing, with the stated principles and practices sprawling like branches on a tree:

- the well-known CIA (Confidentiality, Integrity and Availability) triad was expanded into the nine principles of the OECD’s “Guidelines for the Security of Information Systems and Networks”, first issued in 1992 and revised in 2002
- in 2004, NIST more than tripled them, producing 33 “Engineering Principles for Information Technology Security” (SP 800-27 Rev. A)
- these were later superseded through several revisions, the latest dated March 2018, with the principles giving way to “Security Engineering Activities and Tasks”, whose number has by now grown to over 150

A great many security certification standards have also emerged: PCI-DSS, HIPAA, SSAE (SOC)... and a handful from ISO. The ISO/IEC 27000 series, most probably the talk of the town for its all-inclusiveness, has so many pages in its manuals that it will likely take months to read them all (and, judging from the price of the materials, you seem to pay per word!), so it is considered normal practice to hire consultants to walk you through this quagmire.

No wonder the world of IT has numerous memes on the topic, ranging from “Getting around to security next month” to “What is security? How to ignore it and deliver your project”. Another, no less famous, meme, “Security budget before the breach and after”, says unequivocally that security is important and that it is better addressed early on, unlike the bulk of things that are good enough to be done “later than never”.

To the uninitiated, this state of affairs might seem impenetrable or, to say the least, perplexing. However, it does not have to be so. Going back to the basics should help you see “through the forest” and understand how it all comes together and suddenly starts to make perfect sense, in contrast to life as the Bard describes it in Macbeth: “full of sound and fury, signifying nothing”.

What’s front and centre of information flow

For starters, the security of information that is stored on, processed by and transmitted between different systems is the process of protecting it from various threats throughout its whole lifecycle and ensuring that it reaches the intended parties intact, with its form and content untampered. Please note the word process here: it will be a recurring theme throughout this piece.

The above definition states a few important things explicitly and implies some others:

- regardless of whether the information is in flux or stored for archival or compliance purposes, at some point it is going to be accessed, so it has to be available for retrieval
- of great importance is that the information is not doctored, i.e. it is kept intact both while stored and while in transit
- we want to make sure a person who accesses the information has the right to do so; in other words, the information is also kept confidential

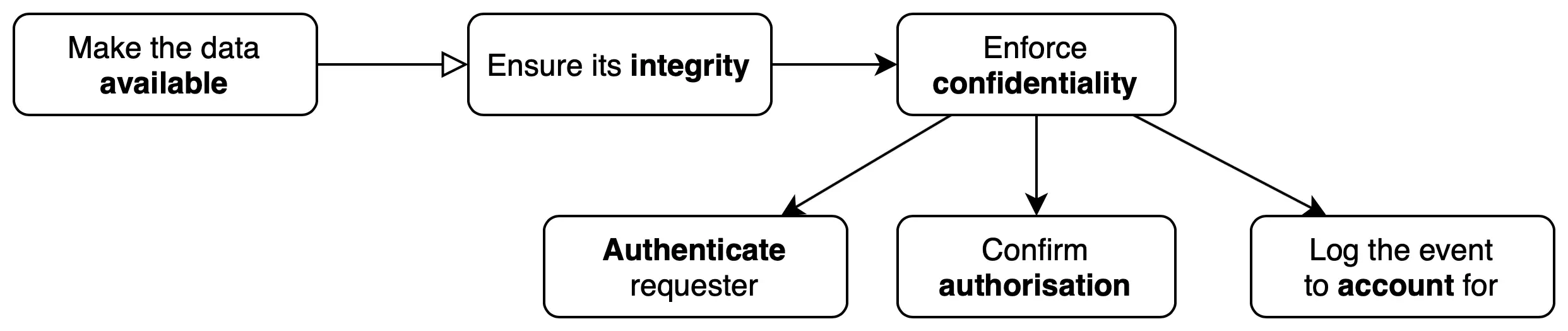

As you can readily see, the list above names the confidentiality, integrity and availability principles in reverse, albeit more natural, order: the data is made available, kept intact, and only those entitled to use it are granted permission to do so. Getting rid of the catchy but controversial CIA abbreviation is also a bonus, if you ask me! Even though the AIC acronym might be harder to remember, there should be no need to memorise it, since the logical sequence of information access is easy to visualise.

And if you think about it, isn’t this the core of any information processing system? I am going to reinforce this point throughout the rest of the article.
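The integrity requirement in particular can be checked mechanically. Here is a minimal sketch using Python’s standard library. It assumes a secret key shared by whoever writes the data and whoever reads it; the key and the function names are illustrative. An HMAC-SHA256 tag is attached to a message and later recomputed, and any change to the content produces a different tag.

```python
import hashlib
import hmac

# Hypothetical shared secret; a real one would come from a secrets manager.
SECRET_KEY = b"example-key-do-not-use-in-production"


def sign(message: bytes, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 tag to store or send alongside the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def is_intact(message: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Recompute the tag and compare it in constant time to detect tampering."""
    return hmac.compare_digest(sign(message, key), tag)


record = b"transfer 100 EUR to Bob"
tag = sign(record)
print(is_intact(record, tag))                           # True
print(is_intact(b"transfer 900 EUR to Mallory", tag))  # False
```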

The confidentiality requirement deserves a bit more attention, and it is easiest to explore through a simple example of our hackneyed user Alice trying to access a piece of information:

- Before Alice can request the data she is interested in, she needs to prove her identity to the system, i.e. authenticate herself
- Once the system verifies that she is indeed “Alice”, it needs to confirm that she has the right to access the information, i.e. that she is authorised to do so
- Regardless of the outcome of the authorisation step, the access attempt should be logged and stored somewhere it cannot be altered, so that Alice can be held accountable for her actions

The preceding example forms the basis of yet another cornerstone of information security, known as the triple A: Authentication, Authorisation and Accountability. Holding someone accountable for their actions is not plain sailing, though, as Alice can deny her involvement in the incident, shifting the responsibility onto a third party who faked her identity. It is therefore paramount to design the authentication procedure in such a way that:

- it is extremely hard for intruders to pretend to be a user authorised to access confidential information (i.e. to steal that user’s identity)
- consequently, it is equally hard for a user to disown actions logged as theirs

In information security parlance, this is known as the principle of non-repudiation. Putting it all together, a simplified information access flow diagram looks like the following:
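The same flow can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not a reference design. The `USERS`, `PERMISSIONS` and `AUDIT` stores are in-memory stand-ins for a user directory, a policy store and a protected log. Passwords are kept only as salted PBKDF2 hashes and compared in constant time, authorisation is a simple per-user allow list, and every attempt is logged whatever its outcome. The iteration count is illustrative, so check current guidance before choosing one. Note also that a shared secret such as a password does not by itself give strong non-repudiation. That usually calls for a credential only the user holds, such as a private signing key.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # illustrative PBKDF2 work factor


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


# Hypothetical in-memory stores: user directory, permissions, audit log.
_salt = os.urandom(16)
USERS = {"alice": (_salt, hash_password("correct horse battery staple", _salt))}
PERMISSIONS = {"alice": {"reports/q3"}}
AUDIT = []


def authenticate(user: str, password: str) -> bool:
    record = USERS.get(user)
    if record is None:
        return False
    salt, expected = record
    return hmac.compare_digest(hash_password(password, salt), expected)


def access(user: str, password: str, resource: str) -> str:
    authenticated = authenticate(user, password)                             # 1. who are you?
    authorised = authenticated and resource in PERMISSIONS.get(user, set())  # 2. may you?
    AUDIT.append({"user": user, "resource": resource,                        # 3. always record it
                  "authenticated": authenticated, "authorised": authorised})
    if not authorised:
        raise PermissionError(f"access to {resource!r} denied")
    return f"contents of {resource}"
```

With this setup, `access("alice", "correct horse battery staple", "reports/q3")` returns the data. A wrong password or an unlisted resource raises `PermissionError`, and every attempt ends up in `AUDIT`.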

The logging step is a necessary but not sufficient provision to counter any repudiation claims, as has already been shown.
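Whatever else is needed, the log itself must at least be tamper-evident. One way to approximate that is a hash chain, in which every entry records the hash of the entry before it. The sketch below is illustrative only; the `AuditLog` class and its fields are hypothetical. It makes tampering detectable rather than impossible, so in practice the latest hash would also be kept somewhere an attacker cannot reach, such as write-once storage.

```python
import hashlib
import json
import time


class AuditLog:
    """Append-only log in which every entry stores the hash of its predecessor."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash all fields except the entry's own hash, serialised canonically.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def append(self, user: str, resource: str, granted: bool) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {"ts": time.time(), "user": user, "resource": resource,
                 "granted": granted, "prev": prev}
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Walk the chain; an edited or reordered entry, or one removed from the middle, breaks it."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

Suppose someone later flips the `granted` flag of an earlier entry. `verify()` then returns `False`, because that entry’s stored hash no longer matches its content.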

To be secure is trying to stay safe

I was born in Russia, got my higher education there, and spent my post-university years in Central Europe, where I learnt Slovak, Czech and some German too. In each of these languages, a single word denotes both the “safety” and the “security” concepts:

- “безопасность” (“bezopasnosť”) in Russian: “the absence or elimination of danger”
- “bezpečnosť” in Slovak (“bezpečnost” in Czech), from the negation of the old verb “pečovať”: “the lack of need to look after”
- “Sicherheit” in German: “reliability, authenticity, safety”

Both English words, “security” and “safety”, come from French (and, ultimately, Latin), and translating their origins reveals a subtle distinction between them:

- “security”: from Old French “securite”, Latin “securitas”, from “securus”: “free from care”
- “safety”: from Old French “sauvete”, Latin “salvitas”, from “salvus”: “intact, whole”

It is interesting to note that the Latin root of the word “safety” actually refers to integrity, that the German word captures almost the whole gist of the “basic security triad”, and that labelling something “secure” implies one can finally stop worrying about it [in the West Slavic languages too; talk about cultures! ;-)]. Now, I don’t know about you, but from my standpoint keeping things secure in the sense discussed so far is an activity that is far from carefree, unless, of course, one literally locks them away in a physical safe (and even then there is a risk of someone breaking in).

Maybe this is one of the reasons security is so often overlooked: it comes as an afterthought, a one-off affair, and is rarely treated as a continuous process (a process, remember?). Time and again we are preoccupied with delivering projects, meeting deadlines and performing. Meanwhile we neglect the safety of the information, the direct and indirect threats we might be exposing it to, and the bad actors who only reveal themselves when it is too late. Almost like the frog in the picture under the heading of this article. In this scenario, security becomes an annoyance and a problem to solve later. On an unfortunate occasion it gets solved at the time least suitable for any security-related work: when disaster has already struck.

By now it has probably become clear why I chose the cheeky title claiming that security is not your “problem”. It is not your problem because it is at the core of what you do: it is front and centre of any information processing system you design. It should always be on your mind while you are writing this line of code or configuring that particular service to integrate with your solution. You should have a mental, or perhaps a virtual, checklist to tick off in order to gauge how secure the component you are currently working on is. The general principles discussed in the previous sections will get you a long way with that. And to manage the process on a bigger scale, especially if you work in a particular industry, security standards are there for your perusal.

Security standards: control and honker

The main reason to have standards, any standards for that matter, is to make certain that those who adhere to them follow a common set of well-defined practices and protocols. Security standards in particular allow one to quickly assess how well a company, or its product or service, manages the risks defined in the body of a standard. A standard identifies the threats, classifies them by severity and rate of occurrence, and requires one to put controls in place that either eliminate or mitigate the danger. While the many standards obviously cover a lot of common ground, they also place different emphasis on various threats and the areas they manifest in, depending on the industry they originate from:

- PCI-DSS: the Payment Card Industry Data Security Standard mainly aims to protect card issuers by ensuring that merchants meet minimum required levels of protection when storing, processing and transmitting cardholder data
- HIPAA: the Health Insurance Portability and Accountability Act, a US law enacted to improve the flow of healthcare information, to regulate how healthcare and health insurance providers handle personally identifiable information, and to protect that information from fraud and identity theft
- SSAE 18: the American Institute of Certified Public Accountants (AICPA) Statement on Standards for Attestation Engagements No. 18. It is more widely known by the abbreviation for the reports produced under it: SOC (as in SOC 1 and SOC 2), after System and Organization Controls. These reports are intended for service organisations, i.e. companies providing information systems as a service to others. They serve as proof that internal controls are in place over the information systems on which those services are based
- ISO/IEC 27001: a kind of “international variant” of SSAE 18 and part of the ISO/IEC 27000 family of information security standards. It prescribes arranging all controls into a system, referred to as an Information Security Management System (ISMS), that encompasses more than just the cybersecurity aspect. It is arguably the most general and overarching information security standard of all, also covering the general security oversight of a company, and for good reason

So security standards require you to acknowledge the known potential risks and the ways they apply to your particular circumstances, and to commit to managing them. Some standards will go hard on you and demand an established security practice or service to address some of the most critical vulnerabilities. Others will let you simply acknowledge a risk and commit to adjusting your security stance on it in the future. There might also be exposures that do not apply to your current situation, but these still need to be explicitly articulated: they may become relevant in time.

As an old saying goes, “with awareness comes enablement”, and that is basically the premise of any security standard.

Containing exposure

Have you ever been asked at a job interview to describe how information is transmitted over the internet? Something along the lines of “Please give me a rundown of how a web page gets displayed in the browser”? It has been a popular question in the recent past, and it is amazing to see how deep one can go in illustrating the process. Well, now that we are hopefully on the same page about the role security plays in the process, I can ask the same question with a focus on how to make that information flow safe, and we can go down a similar rabbit hole.

Once a URL is typed into the browser’s address bar and “Enter” is hit, the web server’s name is looked up in the DNS. This resolves it to one or more IP addresses of the servers to retrieve the web page from, and it exposes the first threat: what if the supplied address does not belong to the server(s) hosting the web page you are after? One attack, called “DNS spoofing” (or “poisoning”), is aimed at exactly this. It is used to redirect traffic from the original “good site” to a malicious one in order to steal the victim’s personal data, trick them into installing some sort of malware on their device, and so on. Pretending to be someone or something legitimate is a common pattern in these types of attacks, and the guard against them is strong authentication. That covers the DNS records resolving names into addresses, which DNSSEC protects by digitally signing the zones. It also covers the server’s SSL/TLS certificate, which confirms that the server is indeed serving the domain name the certificate was issued for. The latter is only possible if a secure protocol (HTTPS in this case) is used for communication between the server and the client browser.
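On the client side, the certificate check is what turns a successful DNS spoof into a failed connection rather than a silent redirect. Here is a minimal sketch with Python’s standard `ssl` module; the helper names are hypothetical. `ssl.create_default_context()` loads the system’s trusted certificate authorities and enables both certificate and hostname verification. The handshake therefore fails if the server cannot present a valid certificate for the name that was requested.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    # Loads the system's trusted CAs and enables certificate + hostname checks.
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse legacy protocol versions
    return ctx


def fetch_peer_certificate(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Connect over TLS and return the server's verified certificate.

    Raises ssl.SSLCertVerificationError if the certificate does not chain to a
    trusted CA or was not issued for `host`, e.g. after a DNS spoof.
    """
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()
```

For example, `fetch_peer_certificate("example.com")["subject"]` returns the subject of a certificate that has already passed both checks.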

Another form of attack is denial of service (DoS), carried out by flooding the target with requests in a way that prevents the normal operation of the affected services. It might be more familiar to many as DDoS, with an extra “D” in front standing for “distributed”. In that case the flood is sent from sources all over the world and amplified through a variety of reflection and pingback techniques. Needless to say, this form of attack can be performed at any level and can propagate all the way down the information transfer chain: from the initial DNS resolution stage, to the load balancer and the applications behind it, through to all other dependent services.
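Volumetric DDoS traffic has to be absorbed upstream, by the network, a CDN or the load balancer, but a service can still refuse to be overwhelmed by any single client. A common technique for that is a token bucket rate limiter. The sketch below is a minimal single-process version. It assumes clients are keyed by IP address and uses illustrative limits: bursts of 5 requests and a sustained rate of 1 request per second.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable so the class is easy to test
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets = {}  # hypothetical keying: one bucket per client IP address


def handle(client_ip: str) -> int:
    bucket = buckets.setdefault(client_ip, TokenBucket(capacity=5, rate=1.0))
    return 200 if bucket.allow() else 429  # 429 Too Many Requests
```

Requests over the limit are answered with HTTP 429 instead of consuming backend capacity.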

Hardly any web service today lacks a database in its backend, and that is another direction a perpetrator might take in an attempt to extract the information stored there. “SQL injection” is one method of gaining access to a relational database through an application or service talking to it. It is therefore important to filter user-generated inputs passed through the frontend and never to splice them directly into SQL statements. Another point of concern is the authentication credentials the service uses to connect to the database, or to any other service or even part of the hosting platform, for that matter. What happens if, in spite of all protection, intruders gain access to the database, service or platform through that compromised service identity? What kind of access will they gain? Hopefully not administrator-level!
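The difference between splicing input into SQL and passing it as a parameter is easiest to see side by side. The sketch below uses Python’s built-in `sqlite3` module and an in-memory database with made-up data. The payload is a classic injection string.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (name TEXT, email TEXT)")
db.executemany("INSERT INTO users VALUES (?, ?)",
               [("alice", "alice@example.com"), ("bob", "bob@example.com")])


def find_user_unsafe(name: str):
    # Vulnerable: user input becomes part of the SQL text itself.
    return db.execute(f"SELECT name, email FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(name: str):
    # Parameterised: the driver passes `name` as data, never as SQL.
    return db.execute("SELECT name, email FROM users WHERE name = ?", (name,)).fetchall()


payload = "nobody' OR '1'='1"
print(len(find_user_unsafe(payload)))  # 2: every row leaks
print(len(find_user_safe(payload)))    # 0: treated as a literal name
```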

I have touched upon just a handful of “front door” attacks, yet there are many other potential exposures that might compromise an otherwise well-designed information system, to name just a few:

- access to the computing units (servers, containers, etc.) hosting an application or service: is there another way to access the servers, through some type of administration interface? How easy is it to discover and gain entry?
- is the traffic between system components deemed inaccessible from outside still encrypted? What happens if malefactors find a way to position themselves inside the “secure perimeter”?
- are there any external or third-party components your system relies on, and how secure are they?

Although the list could go on, it should already be clear that security has multiple facets or, better said, “containers”. It is crucial to protect each of them as if there were no other secure container outside it. Think of them as matryoshka dolls, one inside the other, with your information stored within the innermost one. This is called the “zero trust model” of security and will be discussed in the next section, alongside some other equally valuable measures, but I thought it deserved an early mention of its own here.

It should also be evident, in my opinion, that shielding each of the “containers” is a coordinated effort that can never be done by a single person. The proverbial “security guy” can only ask you questions along the lines of “have you thought of this scenario?”. It is up to everyone responsible for their own “container” to implement an actual and appropriate answer to that question.

Call for action

There is certainly no need to wait for questions from the “security guy” to make your container, if not bulletproof, then at least armour-plated. As noted previously, the general principles form the foundation of any secure design, but it is sometimes beneficial to have a list of actionable items derived from those concepts in front of you, along with some examples.

Segregate

It should come as no surprise that segregation is first on the list in the context of “security containers”: contain the threat and never let it spread. Examples of segregation are:

- network separation into subnets, with carefully designed route tables allowing only defined traffic between certain subnet classes
- composing your system of logical components that each handle their own specialised domain, without “smearing” their scope
- using separate credentials, with distinct access rights to a service, for the individual parts of a system or application

Grant the least possible privilege

Hand in hand with segregation goes the principle of least privilege. It is a well-established rule of thumb to grant only the rights absolutely necessary for accessing a service or information, limited in scope to the functionality the system component in question requires. One good example, already mentioned above, is granting just enough permissions to the credentials a service uses to access a data store. Does it only need to read a particular set of records from a given database? Then allow it to do exactly that, but do not grant write access or, even worse, admin rights to your whole database service.
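In a client-server database, this would be a dedicated role granted only `SELECT` on the tables it needs. To keep the example self-contained, the sketch below uses SQLite’s read-only open mode as a stand-in. The hypothetical reporting service gets a connection that can read but not modify the data. This is a weaker guarantee than a database role, because any code with access to the file could still open it read-write.

```python
import pathlib
import sqlite3
import tempfile

# Set-up with full rights, as an administrator or migration job would have.
DB_PATH = pathlib.Path(tempfile.mkdtemp()) / "reports.db"
admin = sqlite3.connect(DB_PATH)
admin.execute("CREATE TABLE reports (id INTEGER PRIMARY KEY, body TEXT)")
admin.execute("INSERT INTO reports (body) VALUES ('Q3 summary')")
admin.commit()
admin.close()


def read_only_connection(path: pathlib.Path) -> sqlite3.Connection:
    """Open the database so that any write attempt fails (SQLite's mode=ro)."""
    return sqlite3.connect(path.as_uri() + "?mode=ro", uri=True)


reader = read_only_connection(DB_PATH)  # what the reporting service is given
print(reader.execute("SELECT body FROM reports").fetchall())  # [('Q3 summary',)]
try:
    reader.execute("DELETE FROM reports")
except sqlite3.OperationalError as err:
    print("write refused:", err)
```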

Trust no one

Trusting no one, also known as the “zero trust principle”, is a concept that can perhaps best be conveyed visually by reference to medieval castle defence design. There, an enemy who surmounts one fortification is met by yet another. Archers stood on top of the outer walls, with a moat in front of them. Between the outer and inner walls lay a narrow battlefield, overlooked by more bowmen. Guards on the inner walls poured tar on the heads of those who climbed them and fought with swords those who made it to the top. Inside the castle there was more of the same, with the keep as the last stronghold. The structure was conceived to withstand a fierce onslaught, and no one ever assumed that any of the outer “defence rings” was protective enough to stop being vigilant on the inside.

The same holds for your “security container”. Design it carefully and fully own its security as if the adversary were at its front door. Don’t underestimate the risk of that actually happening! Relying on the “outer container” to keep all threats at bay is akin to giving the keys to the kingdom to someone you do not know well or, to put it another way, placing the keys in a safe you have no control over.
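In practice, owning the container’s security means authenticating every request, including those arriving from “inside” the perimeter. Here is a minimal sketch, assuming each internal service holds its own secret key; `SERVICE_KEYS`, `sign_request` and `verify_request` are hypothetical names. The caller signs the service name, a timestamp and the body with HMAC-SHA256. The receiver rejects unknown callers, stale timestamps and any signature that does not match.

```python
import hashlib
import hmac
import time

# Hypothetical per-service secrets; real ones would be issued and rotated centrally.
SERVICE_KEYS = {"billing": b"billing-demo-key", "reports": b"reports-demo-key"}
MAX_SKEW = 300  # seconds a signature stays valid, to narrow the replay window


def sign_request(service: str, body: bytes, ts: int) -> str:
    message = f"{service}:{ts}:".encode() + body
    return hmac.new(SERVICE_KEYS[service], message, hashlib.sha256).hexdigest()


def verify_request(service: str, body: bytes, ts: int, signature: str, now=None) -> bool:
    """Authenticate the caller on every request, wherever it comes from."""
    now = time.time() if now is None else now
    if service not in SERVICE_KEYS or abs(now - ts) > MAX_SKEW:
        return False
    return hmac.compare_digest(sign_request(service, body, ts), signature)
```

The timestamp window only narrows replay attacks. Preventing them fully would also require a nonce that the receiver remembers.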

Encrypt by default

Building on the zero trust principle, it is indispensable to encrypt information at all levels: at rest inside each container independently, and in transit as information is exchanged between them. If you do not trust the outer container to stay uncompromised, you need to encrypt the traffic crossing it and authenticate any entities originating in it before granting them access. The same goes for encryption at rest. If your own container is compromised, the data is still protected, because it is encrypted with a key that only authorised parties can use for decryption.
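For the in-transit half, mutual TLS both encrypts the traffic and authenticates the connecting party. Below is a minimal sketch of a server-side context built with Python’s `ssl` module. The certificate, key and CA file paths are placeholders you would supply, and the function names are hypothetical. Encryption at rest is not shown, because the standard library has no general-purpose cipher. It is usually delegated to the storage platform or to a vetted cryptography library, with the keys held in a key management service.

```python
import ssl


def harden(ctx: ssl.SSLContext) -> ssl.SSLContext:
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # modern protocol versions only
    ctx.verify_mode = ssl.CERT_REQUIRED           # no client certificate, no connection
    return ctx


def make_mtls_server_context(cert_file: str, key_file: str, client_ca_file: str) -> ssl.SSLContext:
    """Server-side TLS context that encrypts traffic and authenticates clients."""
    ctx = harden(ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER))
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # this service's own identity
    ctx.load_verify_locations(cafile=client_ca_file)           # CA trusted to issue client certificates
    return ctx
```

The resulting context would then be used with `wrap_socket(sock, server_side=True)` or handed to whatever server framework you use.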

Design for failure

The information needs to be accessible at all times, so it pays to invest in architectures that are resilient to malfunctions and to capacity issues caused by deliberate attacks (such as DDoS). Get your load balancer to reject all irrelevant packets and absorb legitimate traffic. Meanwhile, your application servers or containers should scale up during peak demand and scale down when things are back to normal. Employ a caching solution between your application and the database, and use a queuing system to process all critical events without losing any.
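The “without losing any” part rests on one rule: an event leaves the queue only after it has been handled successfully. The sketch below illustrates that rule with an in-memory `deque`; a real system would use a durable message broker, and `process_all` is a hypothetical name. Failed events are retried up to a limit and then parked in a dead-letter list instead of being dropped.

```python
from collections import deque


def process_all(events, handler, max_attempts=3):
    """Drain the queue without silently dropping anything.

    Failures are retried; events that keep failing go to a dead-letter list.
    """
    queue = deque((event, 1) for event in events)
    done, dead_letter = [], []
    while queue:
        event, attempt = queue.popleft()
        try:
            handler(event)
            done.append(event)
        except Exception:
            if attempt < max_attempts:
                queue.append((event, attempt + 1))  # retry later
            else:
                dead_letter.append(event)           # keep it for inspection
    return done, dead_letter
```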

Be ready for disasters, when the infrastructure you rely on goes bust. You are better off automating fail-overs and making them happen in the background as part of normal operating procedure.
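At its simplest, automated fail-over means routing to the first endpoint that passes a health check. The sketch below assumes a priority-ordered list of endpoints and an injected `is_healthy` probe, both hypothetical. In production the probe would be a real health check, and the decision would usually be made by the load balancer or the DNS layer rather than by each client.

```python
from typing import Callable, Sequence


def pick_endpoint(endpoints: Sequence[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first endpoint, in priority order, that passes its health check."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    raise RuntimeError("no healthy endpoint available")


# Hypothetical primary and replica; the primary is currently failing its checks.
ENDPOINTS = ["db-primary.internal:5432", "db-replica.internal:5432"]
down = {"db-primary.internal:5432"}
print(pick_endpoint(ENDPOINTS, lambda e: e not in down))  # db-replica.internal:5432
```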

Keep a watchful eye

With everything going on, logging and monitoring are a big part of the process, playing a pivotal role in the fault-tolerance and auto-recovery procedures described just above: how else would you implement them, if not by using the metrics exported by the various parts of your system?

Collecting logs for auditing is one thing, but to be proactive one needs to be able to analyse them and make decisions in real time, especially when there are so many moving parts in play and the “container methodology” is in use. That is where we come to the pinnacle of security response: its automation.
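Real-time analysis can start with simple rules over a sliding window. The detector below is a minimal sketch with an illustrative threshold and window, and it assumes events arrive in time order. It flags a user who accumulates too many failed logins within the window, which is a common sign of password guessing.

```python
from collections import defaultdict, deque


class FailedLoginDetector:
    """Flag users with `threshold` or more failed logins within `window` seconds."""

    def __init__(self, window: float = 60.0, threshold: int = 5):
        self.window = window
        self.threshold = threshold
        self.failures = defaultdict(deque)  # user -> timestamps of recent failures

    def observe(self, user: str, ts: float, success: bool) -> bool:
        """Feed one authentication event (in time order); True means raise an alert."""
        if success:
            return False
        recent = self.failures[user]
        recent.append(ts)
        while recent and ts - recent[0] > self.window:  # forget failures outside the window
            recent.popleft()
        return len(recent) >= self.threshold
```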

Automate incident detection and response

You don’t want to trawl the logs by hand, nor do you want to be alerted about something that can be fixed programmatically without your intervention. The best course of action is to automatically remediate any “regular” type of security issue: excessive rights granted to an entity, an undesired port open to the public, an unencrypted data store or backup, a weak password, etc. Then log the fix and notify the interested party about the action taken, rather than set off an alarm for yourself to rectify the situation manually.
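The remediate-log-notify loop could look like the sketch below. The resource inventory, its fields and the allowed-ports policy are all hypothetical. In a real deployment, both the scan and the fixes would go through your cloud platform’s configuration and management APIs.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("auto-remediation")

# Hypothetical inventory, as a configuration scanner might report it.
resources = [
    {"id": "sg-web", "type": "firewall", "open_ports": {443, 22}},
    {"id": "bucket-backups", "type": "storage", "encrypted": False},
    {"id": "bucket-assets", "type": "storage", "encrypted": True},
]
PUBLIC_PORTS_ALLOWED = {443}  # hypothetical policy


def remediate(resource: dict, notify) -> list:
    """Fix known-bad settings in place, log each fix and notify the owner."""
    actions = []
    if resource["type"] == "firewall":
        extra = resource["open_ports"] - PUBLIC_PORTS_ALLOWED
        if extra:
            resource["open_ports"] -= extra
            actions.append(f"closed ports {sorted(extra)}")
    if resource["type"] == "storage" and not resource["encrypted"]:
        resource["encrypted"] = True  # stand-in for a call to the platform's API
        actions.append("enabled encryption")
    for action in actions:
        logger.info("%s: %s", resource["id"], action)  # audit trail
        notify(f"{resource['id']}: {action}")          # inform, don't page
    return actions
```

Run over this inventory, `remediate` makes two fixes. It closes port 22 on `sg-web` and enables encryption on `bucket-backups`. The already compliant `bucket-assets` is left alone, and one notification is sent per action.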

What about more complex cases that are hard to detect by examining just a single log entry or a set of metrics? How do you look for patterns of intrusion, or for signs of subtle degradation that might indicate larger troubles looming on the horizon? As it turns out, these types of issues fit quite well into the category of problems machine learning excels at. And the really good news is this: if your infrastructure runs on one of the major cloud computing platforms such as AWS, there is now a set of essentially “black box” tools designed to tackle exactly these types of tasks. They do so effectively and without requiring any knowledge of the underlying methods on your part.
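The managed tools hide their models, but the underlying idea of comparing current behaviour with a learned baseline can be illustrated without any machine learning. The sketch below is a deliberately simple statistical stand-in, not a description of how those services work. It flags values of a metric (requests per minute, say) that lie more than a chosen number of standard deviations from their historical mean.

```python
from statistics import mean, stdev


def anomalies(history: list, recent: list, z_threshold: float = 3.0) -> list:
    """Return the values in `recent` lying more than `z_threshold` standard
    deviations from the mean of `history` (which needs at least two points)."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return [x for x in recent if x != mu]  # flat baseline: any change stands out
    return [x for x in recent if abs(x - mu) / sigma > z_threshold]
```

Take a baseline of `[100, 102, 98, 101, 99, 100, 103, 97]`, which has a mean of 100 and a sample standard deviation of 2. Calling `anomalies(baseline, [101, 160, 95])` then returns `[160]`.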

This is not the end

I promised a down-to-earth review of information security that cuts through the jargon, and I hope I have delivered the message. I have explained the importance of security from first principles and argued for its place at the core of information processing. We have looked at security standards, their place and role in the overall landscape, and their usefulness and shortcomings. I have then described the concept of “security containers” and why it is so helpful to operate in that paradigm. I have also provided a list of actionable measures that anyone can use to start evaluating and hardening their systems today, and to keep doing so into the foreseeable future. Even if you cannot implement the “gold standard” solution to address some of your findings, you should be armed with the knowledge and ability to build a structure for working your way up. That means checks and routines, keeping an eye on all of your “defence rings” and building them up, as part of the process.

As with anything, success does not come from sheer determination or from reaching some random goalposts or milestones. It comes from developing useful habits and sticking with them. So please stop trying to solve your problems with security, and start making security your trait.